Augmented Reality Assisted Multimodal Control for Robotic Arm (2009 – 2010)

credit: Roland Aigner, Media Interaction Lab

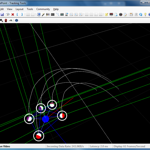

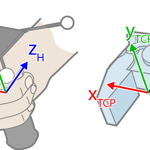

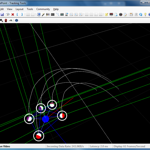

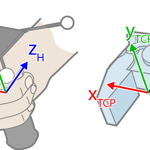

Teach pendant interfaces, the status quo for programming industrial robots, are complex to operate. This thesis explores a novel interface for command and control of robotic arms, aimed in particular at use cases where speed outweighs precision. A miniature 5-DOF robotic arm served as a substitute in a user study of a multimodal robotic interface that combined multitouch input with a command-based speech interface and 6-DOF hand pose tracking, the latter enabled by optical marker-based rigid body tracking. The hand tracking was used to translate the operator’s arm movements instantaneously and 1-to-1 into those of the robot, establishing immediate and intuitive control. A see-through Augmented Reality visualization on a multitouch screen supported the operator with spatially co-located process information. For validation, a user study was conducted to gather both quantitative and qualitative data, which are presented in the thesis.

Context: MSc thesis

Contribution: overall concept and interaction design, implementation of multimodal command-and-control interface, including multitouch, speech recognition, implementation of augmented reality visualization, calibration, adaptive camera control, user study design and execution, data evaluation

Credits: Media Interaction Lab, PROMOT Automation GmbH

Gallery:

Downloads:

The Development and Evaluation of an Augmented Reality Assisted Multimodal Interaction System for a Robotic Arm (MSc Thesis)

© copyright 2025 | rolandaigner.com | all rights reserved
